Earlier this week I was listening to a webinar hosted by the Chronicle of Higher Education when a throwaway comment by one of the participants caused quite a stir: he casually mentioned a university that was considering an AI use policy imposing the same rules on faculty and staff as on students. The chat sidebar filled with indignation. The pushback centered on a key distinction: adults use AI as a tool for work, while students need to develop their writing skills independently. This disconnect raises an interesting question.

What is the “cheating” framework for adults who use AI in their professional role?

Do Adults Cheat with AI?

We’ve all seen the embarrassing headlines when an employee is “caught” using AI in a published document. Whether it’s the now-infamous legal brief citing fabricated cases or the recent fake, AI-generated summer book recommendations that ran in the Chicago Sun-Times and Philadelphia Inquirer, these incidents became public only because the authors failed to fact-check. Had those hallucinations been flagged and corrected, no one would have been the wiser.

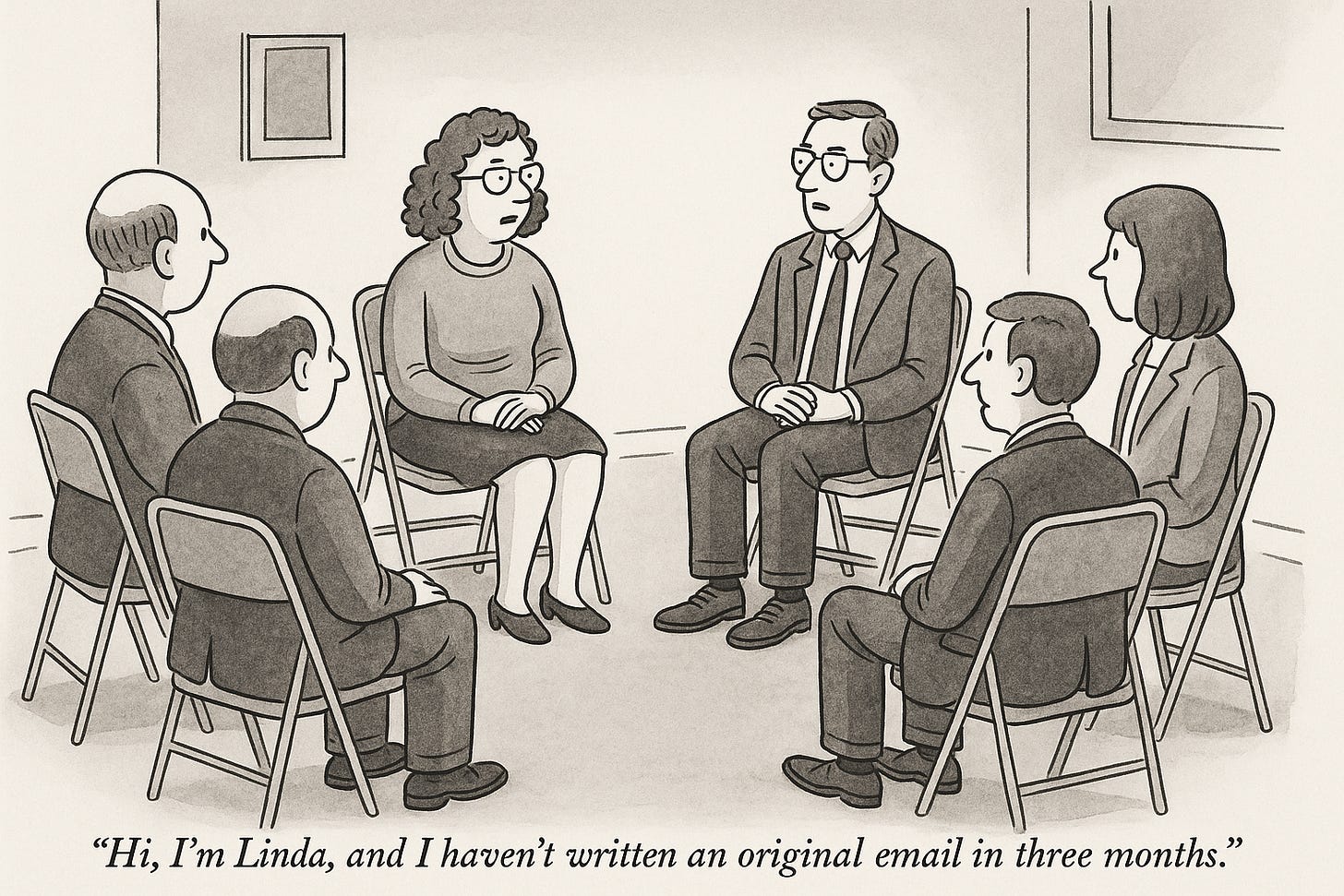

What doesn’t make headlines are the millions of AI-written or AI-assisted texts that move through offices worldwide every hour. From pure AI output with minimal oversight to AI assistance with a human in the loop, employees, including faculty, clearly use these tools extensively in their daily workflows. Many employers now demand it.[1]

Are all these adults “cheating”? If an AI-assisted legal memo benefits a client or an AI-written grant application secures funding, aren’t those, by definition, successful outcomes for the people who produced those documents and for the companies or institutions that employ them?

If AI-generated emails and meeting summaries inform their recipients more efficiently than any human could, or AI-assisted research leads to better business or academic decisions, isn’t that fulfilling AI’s workplace promise?

Professional work product is evaluated on its effectiveness, utility, and results, not on its process. Adults write in their professional capacity for fundamentally different reasons, and in different ways, than students do in academic writing.

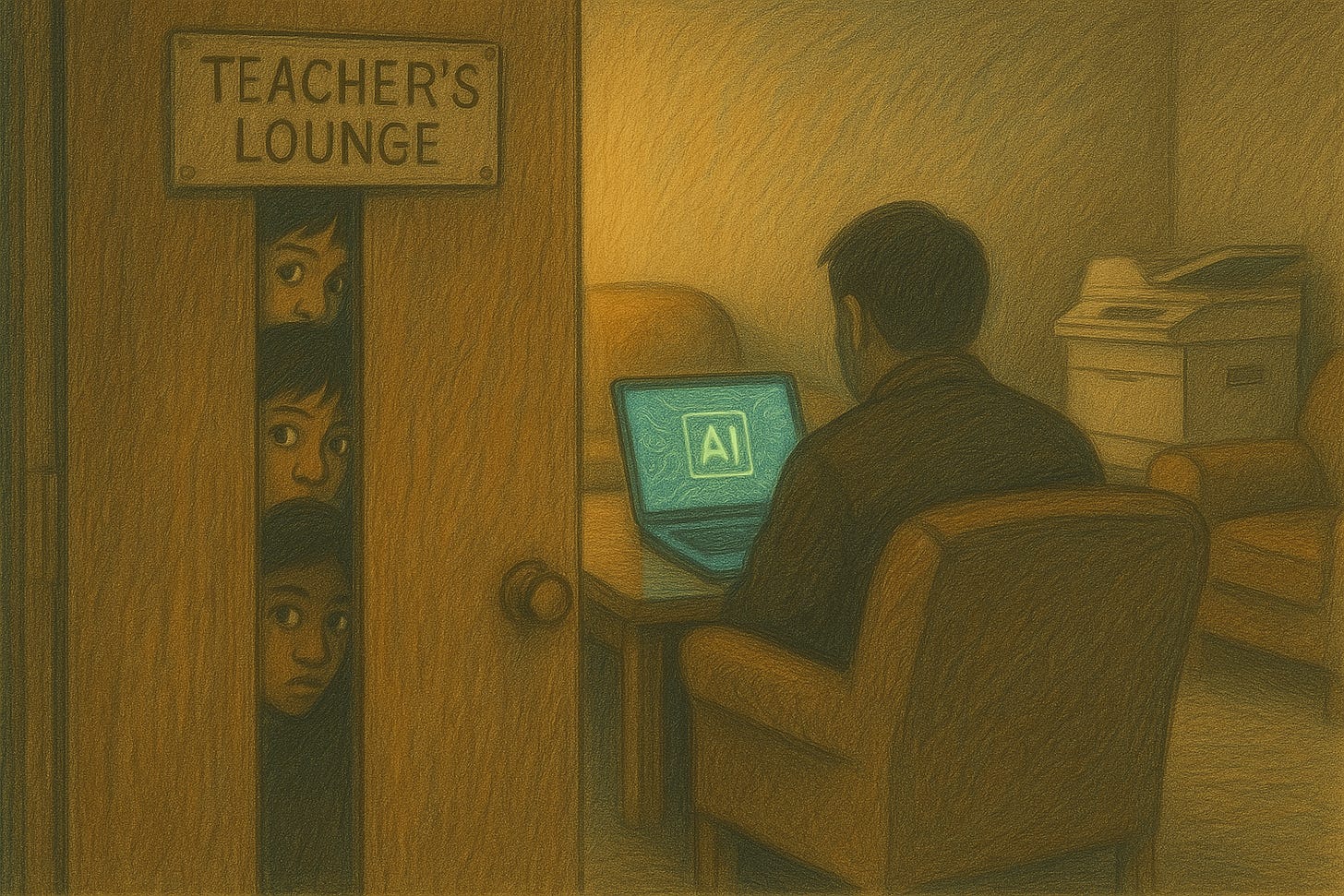

Students See the Hypocrisy

Students understand this disconnect all too well.

"The idea that when students turn to AI, they're being lazy and cheating completely enrages me, because that's not true," says Allison Abeldt, a Kansas State University undergraduate.

McMurtrie, Beth. "AI to the Rescue." The Chronicle of Higher Education, 20 June 2025.

She and her peers describe using AI as "an aid and tutor" to streamline assignments, compensate for poor teaching, and manage busy schedules while working to pay for college.

How different, really, are these uses from professional AI applications?

What is the purpose of college work for many students?

Of course, teachers argue that assignments, in the hands of a skilled and empathetic instructor, are designed to help students improve their craft, master content, and hone a critical communication ability, perhaps the most important human communication ability of all.

However, since most students won't become professional writers, many view AI the same way adults do. School assignments become their version of professional work.

For them, the goal of a piece of writing (or any other graded assessment) is not necessarily to become a better writer. It’s to get a better grade. We may wish this weren't true, but as long as we assign evaluative scores to student work, many will view the exchange as purely transactional.

And if AI helps accomplish that goal, so the student logic goes, why shouldn’t they use it, just like the employee who wants to produce a useful memo or generate a slick slide deck?

The stakes are different when students use AI versus when adults use it.

When lawyers and writers fail to fact-check their AI output, the consequences can be severe: damaged reputations, professional sanctions, and lost revenue. But notably, they aren’t penalized for using AI; they are penalized for using it poorly. The lawyers faced the judge’s wrath not because they turned to ChatGPT, but because they didn’t check their work. The newspapers faced backlash not for employing a writer who used AI, but for publishing unvetted recommendations.

Students, meanwhile, face academic penalties simply for AI use itself, regardless of quality or verification. The business world punishes incompetent AI use while it rewards effective AI use. The academic world tends to punish AI use categorically.

Is it any wonder that students are so quick to point out the hypocrisy of teachers and other adults who use AI?

The Writing Teacher’s Dilemma

"Things are changing in front of our eyes in the classroom … [S]ome colleagues are still going in and teaching the way they always did, and in actual fact, students are learning very differently in many ways," says Linda Dowling-Hetherington, who studies student AI use at University College Dublin.

While faculty across most disciplines are dismayed at student AI use, the most vocal opposition to AI understandably comes from writing instructors.

Many humanities teachers reject AI emphatically on philosophical grounds alone, but writing teachers may have an even more powerful reason: they recognize the cognitive dissonance of using a tool that substitutes for thinking when they have dedicated their careers to teaching writing as a “process.”

Every other Substack post arguing that we should not allow students to use AI in their writing makes some version of the following points:

Writing is thinking.

I only know what I am thinking when I write.

Struggling to find the words to express yourself is the essence of learning to write.

All of this is true.

This is why providing AI-free writing opportunities for students remains essential.

Unlike in office environments where results matter most, for students the struggle from idea to final draft is the point.

But here's the problem: if we can't convince students that the writing process has value beyond grades, they'll keep treating assignments like work tasks to optimize with AI.

Different Rules, Different Reasons

The faculty in that webinar chat recoiled at the thought of AI restrictions on their own work. Interestingly, no one discussed the actual policy the school was considering: did it intend to make its rules more restrictive for faculty or more permissive for students?

No doubt many wish we could outlaw AI entirely and return to some romanticized version of a technology-free campus. This Pollyannaish view is both unrealistic and wrongheaded.

And while students who cry "hypocrisy" about differential AI policies have a point, they're missing crucial context. Society already maintains different standards for adults and minors. Students under 21 can't drink alcohol on campus, and those under 18 can't vote or, without parental consent, enlist in the military. We may eventually reach a stage where AI is regulated similarly by age, but for now, the question is whether different rules around AI use serve legitimate educational purposes and reflect professional realities.

Beyond Prohibition and Detection

The current debate shouldn't center on whether adults or students should use AI. Hammering away at the evils and drawbacks of AI may be satisfying, but it won’t magically make AI disappear. In our digitally connected world, AI tools are here to stay.

This doesn't mean teachers must adopt AI in their classrooms. But they do need to talk openly with students about AI use and experiment enough with the technology to understand what students are actually doing.

Talking about AI means more than merely reviewing AI policies. It means creating space for meaningful dialogue where students can share their perspectives without fear of reprisal. Students will take their cues from the tone and attitude of their teachers. Many will continue to use AI no matter what, but showcasing your ignorance or demonizing the technology will only make them tune you out or, worse, reveal how easy it will be to get away with using it in your class.

This approach also requires educators to be honest about their own relationship with AI. Modeling responsible use might mean explaining why you don't use AI, or it could mean demonstrating how you verify AI output when you do. The key is thoughtful discussion.

The path forward cannot simply be more AI detection tools and stricter policies. Ultimately, it will mean designing learning experiences that students value for their own sake, not just for their transcripts. But until we bridge this gap between our pedagogical intentions and student reality, the "rules for thee but not for me" dynamic will persist, and many will simply stop asking: Why can't we use the same tools you do?

[1] Amazon CEO Andy Jassy recently made clear that Amazon employees are expected to use AI. Gallagher, Dan. “The Real Message Andy Jassy Is Sending to Employees on AI.” The Wall Street Journal, 19 June 2025.

This nails the quiet tension so many students feel but rarely say out loud: “We’re just doing what you taught us.”

Not in some abstract moral sense—but in a daily, visible way. When adults use AI, it's efficiency. When students do, it’s dishonesty. But both are using the same tool to navigate overloaded systems and high-stakes outcomes.

The question isn’t whether students should struggle through writing. The question is whether the struggle has purpose—or just weight. Because when process becomes performance, students treat it like any other task to optimize.

What if the problem isn’t that students are cheating—

but that they’re mirroring?

And what if the real discomfort is that they’ve learned from watching us?

We don’t need more restrictions. We need better conversations. Ones that treat students not as problems to police, but as co-authors of the future they’re inheriting.

Wow - the solution can't boil down to creating a list of what's appropriate for students to use vs. what faculty can use.

Educators in HE really need to start thinking about how they're going to integrate this technology into every class.