The AI Curriculum That’s Still Missing

Schools tackled DEI, mental health, polarization, and climate - why not the technology that intersects with them all?

The school seems to believe that if they bring it up and talk to us about it, even more people will use it, but I think that if we’re going to exist in a world with artificial intelligence, everyone needs to be educated about it.

Over the past decade, schools have moved swiftly to address major social, cultural, and political crises. In the wake of George Floyd, they created and rapidly implemented DEI programming across grade levels and disciplines. As anxiety and depression surged during COVID, they invested in mental health awareness and sensitivity training. Race and gender discrimination, political polarization, and climate change concerns led to assemblies, guest speakers, and entire curriculums designed to educate and inform. But when it comes to artificial intelligence - the most transformative technology students are likely to encounter in their lifetimes - most schools are oddly silent. That silence is especially significant because AI isn’t a separate issue. It’s a force multiplier that will shape virtually every concern schools already prioritize: inequality, mental health, civic engagement, and environmental sustainability.

The Elephant in the Building

Despite AI continuing to dominate headlines, most schools still treat it like a taboo.

When AI is mentioned at all, it’s almost always in the context of cheating. That’s a failure of imagination and a missed opportunity. Students like Sam Barber aren’t just asking whether AI use should be permitted or prohibited. Today’s students need more. Every newspaper and magazine is running stories about how AI is already upending the economy, human relationships, and the environment, and about what it will mean to live in a world surrounded by powerful AI.[1]

And yet, AI is still whispered about among teachers (though rarely in front of students), as if simply naming it might encourage its misuse, almost like a disease one might catch.

Schools Can Move Fast - When They Want To

What stands out this fall is the stark contrast between how schools have responded to other urgent social issues and how they’re reacting (or not reacting) to AI. While academic integrity policies are being upgraded, that’s usually where the conversation stops. Most schools aren’t talking about AI as the transformative cultural, historical, and economic force it is, even as it restructures the industries and institutions our students are preparing to enter.

Outside the classroom, AI is drawing trillion-dollar investments,[2] decimating jobs, and raising alarms at the highest levels of government. If Anyone Builds It, Everyone Dies, a recently published book co-authored by the influential AI intellectual Eliezer Yudkowsky, clearly outlines the stakes. Regardless of whether you are an AI doomer, optimist, pragmatist, or somewhere in between, shouldn’t students at least understand what the debate is about?

If we’re willing to introduce complex conversations around race and inequality, mental health, democracy, and climate, why are we so hesitant to position AI within a similar framework? The AI era is already upon us, and it deserves its own seat at the curricular table. Perhaps the hesitation stems from not seeing the connections, so let me make them explicit.

AI Isn’t the Next Issue. It’s All of Them At Once

Inequality

Start with inequality. AI threatens to deepen existing wage gaps by automating middle-class jobs while continuing to consolidate power at the top. While the most hyperbolic and dire predictions are calling for existential levels of job loss, even an unprecedented wave of white-collar displacement - say 20% - could trigger an economic shock rivaling the Great Depression. At the same time, AI systems are increasingly used in hiring, sentencing, and financial decisions, often replicating or even amplifying existing societal biases. These are the kinds of questions we already explore in conversations around systemic discrimination. An AI curriculum would give students another lens to understand how bias operates not just in people, but also through algorithms and data.

Mental Health

Then there’s mental health. From AI romantic companions marketed to lonely young men, to students seeking emotional and therapeutic support from chatbots, the psychological effects of AI dependency are already apparent. As I wrote last week, and as Maureen Dowd noted in the New York Times this past weekend, we’re seeing a generation at risk of substituting digital intimacy for real human connection. The long-term consequences could be profound. Since schools are already invested in helping students build healthy relationships and manage their mental well-being, examining the psychological effects of AI can be a direct extension of those efforts.

Political Polarization

And of course, there’s polarization. Like social media before it, AI systems are engineered for persuasion, engagement, and manipulation. But as the tools become even faster, more personalized, and harder to detect, and AI-generated content becomes indistinguishable from reality, including ultra-realistic deepfakes,[3] the risks to political discourse, voting behavior, and public trust grow exponentially. In a time when schools already emphasize media literacy and critical thinking, it’s even more essential that we help students recognize and navigate this new terrain.

Climate and Sustainability

Finally, AI’s environmental toll may be the most pressing concern for students who’ve grown up during climate strikes and school walkouts. The energy demands of massive data centers, the race to build ever-larger models, and the strain on global infrastructure raise serious questions about sustainability. The carbon cost of AI isn’t a separate issue. It’s part of the same climate story students already care about. Ignoring this link in the curriculum misses a golden opportunity to connect an understanding of emerging AI technologies with environmental responsibility.

The One Issue That Rules Them All …

A broad AI curriculum isn’t about classroom misuse or plagiarism. It’s much more than that. AI is the “one ring that rules them all” - it compounds nearly every issue schools have already identified as important. And it’s only going to become more relevant.

The good news is that schools already know how to do this. An AI curriculum doesn’t have to start from scratch. AI can be a prism through which we continue emphasizing the values we already say matter. The same curricular infrastructure schools built for DEI, mental health, civics, and climate can be extended here. We already know how to host speakers, lead advisory conversations, design cross-disciplinary units, and empower student-led inquiry. AI deserves that same treatment.

Students need a curriculum that helps them understand how generative AI is already transforming the world they live in - economically, socially, politically, and environmentally.

What Might an AI Curriculum Actually Look Like?

A comprehensive AI curriculum wouldn’t start with coding prompts or technical training. It shouldn’t be about “AI literacy” or “fluency.”

It might begin with essential questions like:

Who controls new technologies? What (and whose) values are embedded in the tools we build? What is intelligence? What does it mean to live - and learn - alongside “smart” machines?

From there, we could design programming that builds directly on structures we’ve already developed for other important issues:

DEI & Inequality: Hold seminars on algorithmic bias and automation’s impact on labor markets. Teach units examining who builds AI, who benefits, and who gets left behind.

Mental Health & Human Relationships: Create student forums exploring the rise of AI companions, digital relationships, and AI therapy. What are the psychological implications of anthropomorphizing AI tools? What do we gain and what do we lose?

Civic Engagement & Polarization: Develop media literacy modules to help students detect AI-generated misinformation and political manipulation, and evaluate synthetic content. What do these new technologies mean for the future of politics and governance?

Climate Change & Sustainability: Design interdisciplinary lessons on AI’s environmental costs, from the energy required to create large models to the construction of enormous data centers. Are these tradeoffs worth it? How will we know and what can we do about it?

There are other AI issues we should not ignore as well:

Privacy & Data Rights: Lead discussions about surveillance technologies, consent, and what users sacrifice - whether they know it or not - when they interact with AI tools.

Regulation & Geopolitics: Share articles on the AI arms race, national security risks, regulatory responses, and how AI is affecting U.S.–China relations, both diplomatically and militarily.

We already know how to structure these conversations. Just as we’ve done with DEI or mental health programming, an AI curriculum might include:

Grade-level assemblies introducing AI’s social, ethical, and cultural impact

Guest speakers from journalism, industry, and policy sharing real-world perspectives

Advisory conversations and student-led clubs where students can explore their curiosity and concerns about AI

Capstone projects or research electives examining emerging AI technologies through historical, philosophical, environmental, and scientific lenses

An AI curriculum does not mean integrating AI tools into classroom practices. It’s about giving students the means to understand, critique, and navigate the most consequential technology of their lives in the same way we’ve tried to do with the other defining issues of their generation.

When Students Do Get to Talk About AI

This fall has revealed something important for me. Even within the narrow confines of an AI policy discussion, it’s clear that students are hungry for broader conversations.

Whenever AI comes up, something predictable happens. What starts as a dialogue about academic integrity quickly becomes something else entirely. Students want to talk about the future, about how this technology is already changing the world they’re stepping into, and about what it means for their careers and society in general. “Cheating” may be the entry point, but it’s clear the conversation wants to be bigger than that. The institutional space carved out for it, however, is not.

This constraint isn’t unique to my school. The academic integrity lens has become the default (and usually only) frame through which schools engage students about AI. Not because that’s where the conversation should be, but because that’s the only formal space that exists for it.

What Student-Led Spaces Reveal

My advisory role with the student AI club has been an exception. More than a dozen students showed up to our first meeting. Most had never heard of the acronym LLM. Few understood how these tools work. But the questions came fast and we barely scratched the surface. I suspect future meetings will draw more students who want a place to air their fears, curiosities, and questions, especially because nowhere else in the building is anyone talking about this systematically.

Navigating AI in Real Time

In my Independent Research class, where we frequently grapple with how AI might fit into the research process, the conversation has opened up completely. The platforms and tools are improving so quickly that I can’t even be sure our course will look the same by the end of this academic year, let alone two or three years from now. Sharing that anxiety and uncertainty honestly allows for much more productive dialogue. No one is asking how to cheat better. We’re all trying to understand what producing a lengthy, well-researched project looks like in an environment where AI can assist, distort, and replace student thinking at virtually every stage.

There is no roadmap for when or whether to utilize AI’s strengths in the face of its considerable risks. Rather than pushing the issue underground, we need to keep it out front. It will be messy and require constant re-evaluation. But I don’t see any other way.

Policies Will Not Be Enough

What I’m learning is that, while policies matter, they will not by themselves chart the path forward. Guardrails and consequences are obviously necessary, but it’s the willingness of adults to talk with students, and not around them, that will define how well they navigate the social, ethical, and academic issues raised by AI.

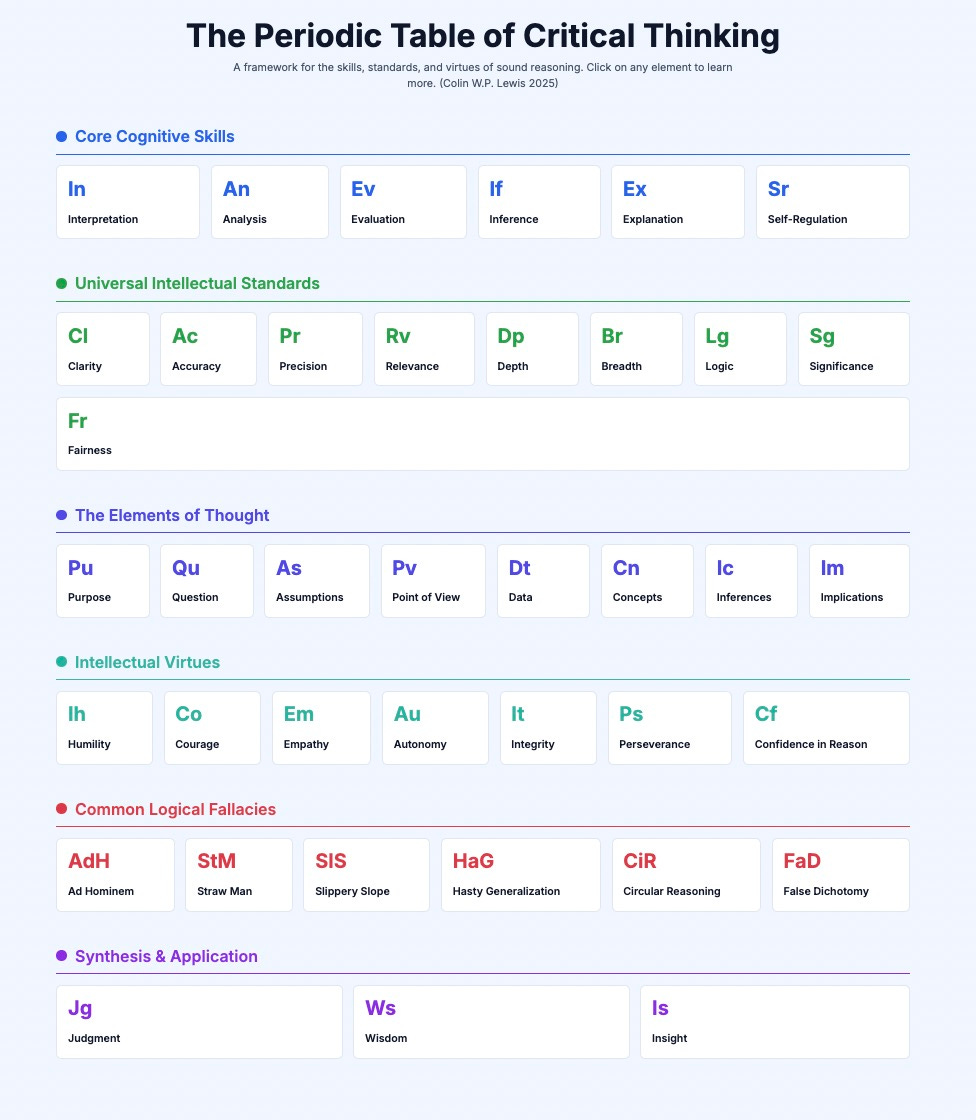

One of the resources I used to help anchor these conversations is Colin W.P. Lewis’s “Periodic Table of Critical Thinking.” It asks us to identify the cognitive and emotional skills that AI can’t replicate. Students must understand the difference if they want to preserve their creative and intellectual autonomy. We’ve talked honestly about where AI might help, and when it will likely hurt, their learning over the long term.

That honesty builds trust. When we de-center the AI conversation away from “cheating,” it changes the dynamic. It invites students into a much deeper inquiry, not just about school and academics, but about their future in a society infused with AI.

They’ve been waiting for that invitation.

Meeting the AI Moment

When schools have felt compelled to act, they’ve moved quickly. They found time to confront racism, mental health, political division, and climate change. The infrastructure exists. The pedagogical models are proven.

What’s missing is the will to recognize that generative AI belongs in that same category - not as a technical skill to master, but as a transformative technology we all need to understand.

The current impacts are already undeniable: economic disruption, manipulated information ecosystems, psychological dependency, and environmental costs. Whether AGI arrives in two years or twenty, students are living through an AI revolution right now. They deserve more than silence punctuated by academic integrity warnings.[4]

If we had the courage to talk about race, trauma, politics, and climate, we have what it takes to talk honestly about artificial intelligence. The question is whether we’ll start before it’s too late.

[1] A forthcoming book, AI and the Art of Being Human by Jeffrey Abbott and Andrew Maynard, is exactly the kind of text that might anchor a student-facing AI curriculum.

[2] A recent Economist cover story asks: “What if the $3trn AI investment boom goes wrong?”

[3] If you have not heard of OpenAI’s new video model Sora 2, you will almost certainly see examples in the coming days and weeks. It’s bonkers.

[4] Of course, some educators and schools are engaging thoughtfully with AI. But, from my vantage point, these efforts remain largely focused on AI as a classroom tool rather than as a cultural force requiring its own curriculum. Though I’m sure it exists, I have yet to see a school-wide AI curriculum that treats the technology with the same systematic, multi-disciplinary approach we’ve developed for DEI, mental health awareness, or climate education. If you know of comprehensive AI curricula that fit this description, I’d welcome hearing about them in the comments.

Great, well-rounded article! Thanks! I am new to the AI discussion, seeing the tip of the iceberg, trying to learn a lot, fast. But I’m wondering… how many schools still have the liberty to discuss race, inequality and climate change, let alone how AI will impact those areas? DEI initiatives have been erased at public schools in my conservative state. The legislature is very involved in the curriculum at all levels. How long will teachers retain the freedom to work these topics in?

Hi Stephen, a colleague from the humanities (I myself am an AI researcher and educator) surfaced your writings for me. I have started to share on my Substack the curriculum of my new Mason Core course, UNIV 182, which I am teaching this Fall 2025. I designed this course to be an introductory experience, but students get to see all aspects: history, a bit of theory and math, some light coding (with no coding background), prompting, building "agents" with no code, evaluating, and critiquing, lots of critiquing. The series is "Journey through AI: Weekly Lessons from the Undergraduate Classroom." Hoping to connect with you.