Encirclement and Attrition: ChatGPT’s Study Mode Isn’t Just a Feature — It’s a Strategy

A Wolf in Sheep's Clothing

In 1931, the NAACP and Thurgood Marshall launched a legal strategy, known as “encirclement and attrition,” to dismantle Jim Crow. The name, borrowed from the military, described a deliberate campaign to surround entrenched positions and apply pressure from every side until they cracked.

Reading OpenAI’s announcement of its new “Study and learn Mode,” I couldn’t help but think of that metaphor.

Though the stakes are radically different, the tactic feels eerily familiar. The new feature may look like a welcome addition to ChatGPT’s toolbox, but in reality it is a bid to surround traditional education and make ChatGPT the first, fastest, and most trusted source for student learning.

On the surface, OpenAI’s announcement brims with optimism. It’s a celebration of how AI might help students “study and learn.” But beneath that upbeat tone lies something much more calculated. Whether intentional or not, Study Mode feels like an attempt to ameliorate a problem they themselves created nearly three years ago, when ChatGPT dropped into schools with no warning, guardrails, or guidance. Now, they’re offering themselves as the first stop for students facing each and every academic challenge. Not their teachers, not their peers. But AI.

Socratic-style chatbots aren’t new. Since LLMs went mainstream in 2023, platforms like Khanmigo and Socrat.ai have offered “tutor modes” designed to walk students step-by-step through problems.

Some work better than others, but they all share one key trait: they’re educational tools adopted by institutions, vetted by teachers, and used under some level of professional oversight. Students did not seek these out on their own. Why would they, when free AI is available with no restrictions?

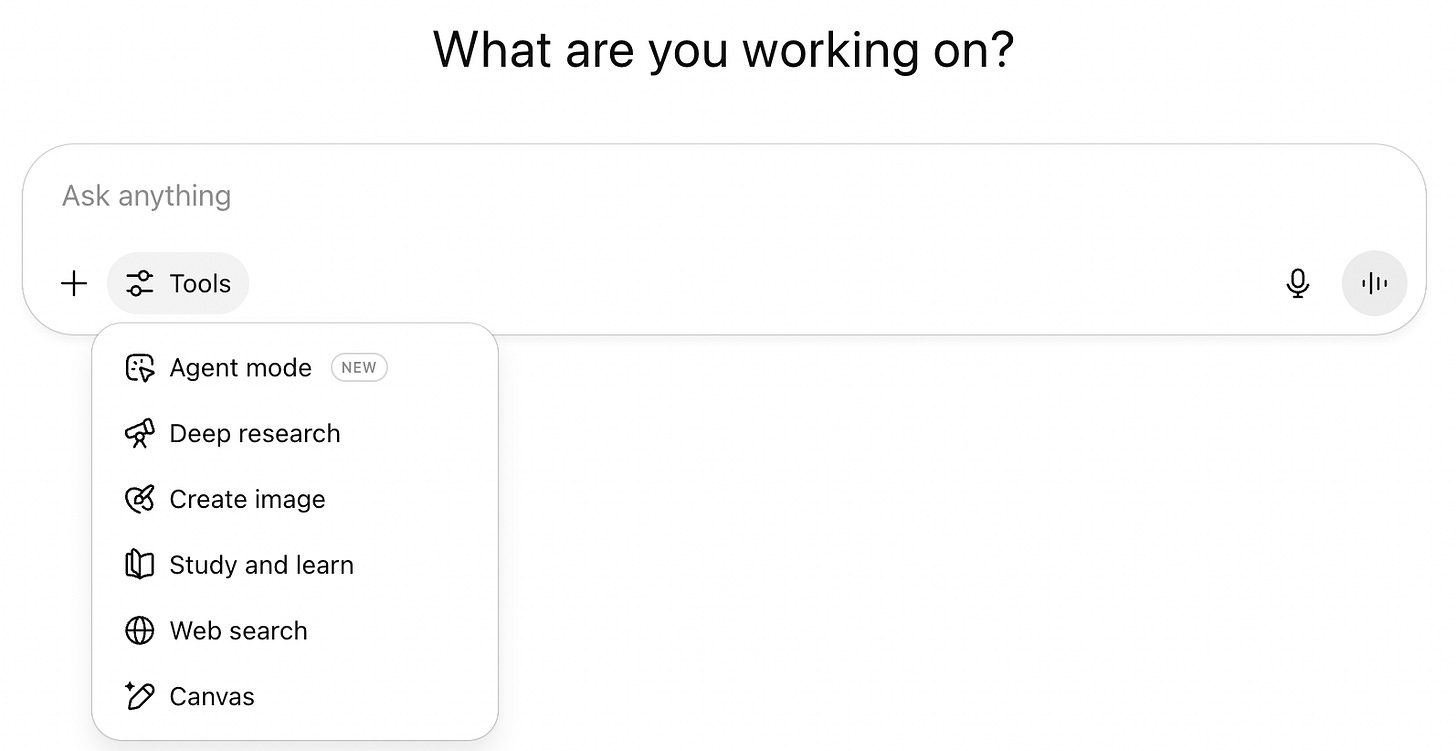

ChatGPT’s Study and learn Mode is different. It’s not a wrapper or a school-approved tool. It’s not a GPT buried somewhere in the platform’s digital infrastructure. It’s a promoted feature that appears in the drop-down menu of the world’s most popular AI platform, and it is aimed directly at students.

OpenAI as Educator: A New Positioning

That’s new positioning. When OpenAI adds a Socratic tutor to its core product, it signals that ChatGPT intends to be the preferred destination for students when they’re stuck.

And because OpenAI is the 800-pound gorilla in the AI arms race, its move will almost certainly be copied. Gemini, Claude, and every other player fighting for education market share will roll out their own versions. What we’re watching is the quiet and insidious normalization of AI as the default learning environment, but without the structures or choices schools usually rely on to guide that process.

“Study mode is designed to be engaging and interactive, and to help students learn something—not just finish something.”

OpenAI is no longer hiding its influence on student learning. It’s courting the market. Three years after launching a product that detonated student assessment as we knew it, OpenAI now arrives with a press release positioning itself as both the culprit and the savior.

In their official statement, OpenAI declares:

“ChatGPT is becoming one of the most widely used learning tools in the world… But its use in education has also raised an important question: how do we ensure it is used to support real learning, and doesn’t just offer solutions without helping students make sense of them?”

And they follow that admission with a carefully curated set of testimonials, all from college students:

“The best way I’d describe it is a live, 24/7, all-knowing ‘office hours.’” —Noah Campbell, college student

“Study mode did a great job breaking down dense material into clear, well-paced explanations.” —Caleb Masi, college student

“I put study mode to the test to tutor me on a concept I have attempted to learn many times before: sinusoidal positional encodings. It was like a tutor who doesn’t get tired of my questions. After a 3-hour working session, I finally understood it well enough to feel confident.” —Maggie Wang, college student

Notice what’s missing: teachers, peers, classrooms, collaboration. In OpenAI’s vision of academic support, the human element is entirely erased. It’s an aggressive play to own the learning experience itself.

Its Entire Premise Is Based on Magical Thinking

Those cherry-picked quotes aren’t data. Show me the evidence that students actually want to learn this way. Nothing in the reports, surveys, or other stats recently discussed in the Chronicle of Higher Education suggests that what students are looking for is a more thoughtful chatbot that engages them and slows them down. They desperately want the adult humans in their lives to help them understand AI, not to hand their learning over to it entirely.

They turn to ChatGPT for efficiency, speed, and an open-ended sandbox, much like the rest of its 800 million weekly users.

The problem is that, unlike purchased AI wrappers such as MagicSchool, Flint, or SchoolAI, ChatGPT lets users toggle between Study Mode and standard mode at will, putting the burden entirely on students to self-regulate their AI use. If the past year has taught us anything, it’s that this is an unreliable bet.

That puts teachers and professors in a new bind. Some will keep attempting the impossible by trying to ban AI entirely. Others may cautiously endorse Study Mode, assuming it curbs misuse. The irony? Recommending Study Mode to students might be the first step in acknowledging our own superfluousness.

I briefly test-drove Study Mode, and from a teacher’s perspective, the Socratic structure and content-rich prompts are thoughtful and well designed. But that very impression reinforces the teacher fantasy that students want to learn through the methodical, Socratic “good struggle” embedded in the chatbot’s customized instructions.

As long as a one-click return to the shortcut version remains available, more student use risks more misuse, not less, at least in the short term. Even students who intend to use Study Mode as designed will need training and guidance so they don’t get frustrated and immediately switch back to the default model. I don’t see that becoming the norm anytime soon.

Until higher education confronts the elephant in the room, Study Mode may be offered as a panacea, but it cannot possibly solve the campus crisis over AI-generated student work. It will actually make it harder this fall to keep kids away from AI.

Study Mode also provides a clean on-ramp for students who have resisted AI precisely because of its association with cheating. Now, they may feel they have institutional “permission” to use it. And once they get started in Study Mode, it’s an easy slide to more and more automated solutions.

Designing for Instruction

Given that models from both Google DeepMind and OpenAI recently achieved gold-medal-level scores at the International Mathematical Olympiad, it’s hard to argue these tools can’t support college-level instruction. The capacity is clearly there.

As Marc Watkins recently observed,

“AI is now being used by students to learn the material we want to see students show us within those proctored assessments. Learning itself, not simply assessment, is now being impacted by AI.”

Some Things Need to Be Grown, Not Graded, and Definitely Not Automated

And Study Mode is OpenAI’s attempt to accelerate that reality.

Over the past few months, I’ve had numerous conversations with students and educators, and one theme keeps surfacing: when instruction is weak, whether unclear, disengaged, or just plain bad, students don’t sit idly by. They turn to AI.

That’s the uncomfortable truth Study Mode forces us to reckon with: if a chatbot can teach something more clearly than a teacher, anonymously and 24/7, students will make the switch.

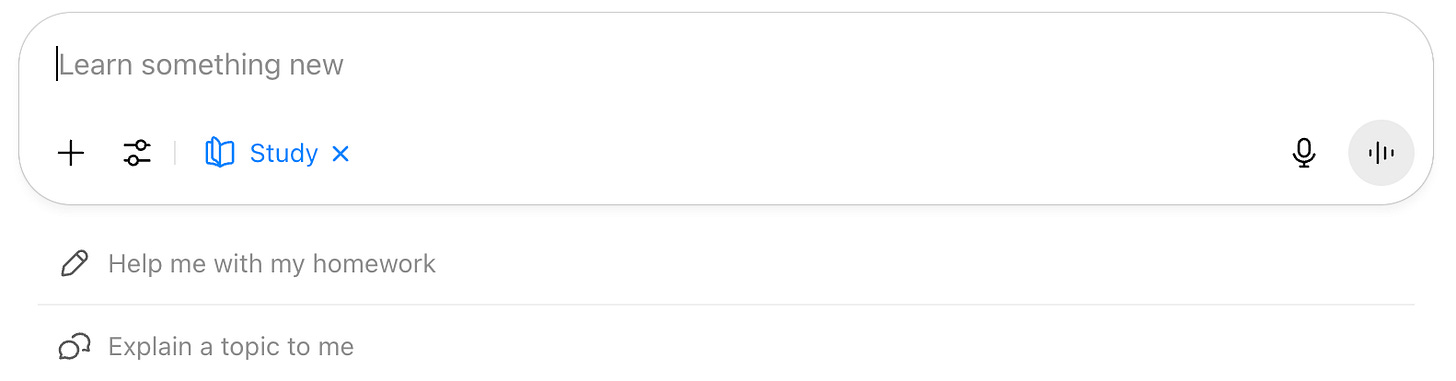

It’s an open question whether Study Mode will make learning easier or more effective (we have no idea yet), but it does represent a major shift in OpenAI’s messaging. The first two default prompts alone make the company’s intentions clear:

“Help me with my homework.”

“Explain a topic to me.”

The implication is unambiguous: Skip the struggle, skip your teacher, skip your friends. Ask the bot.

It’s November 2022 all over again, but this time as a Trojan horse. Not because the models have taken a quantum leap, but because OpenAI is doing damage control while squeezing itself further into the education lane.

The Final Play

Study Mode is well-intentioned, if a bit naive. But it’s only the opening move, made with an endgame in mind.

As OpenAI puts it:

“This is a first step in a longer journey to improve learning in ChatGPT. We plan on training this behavior directly into our main models once we’ve learned what works best through iteration and student feedback.”

They aren’t being subtle, and they aren’t hiding their ultimate goal. Study Mode is the prototype for how OpenAI wants all student learning to function. And if they get students hooked, whether or not those students use Study Mode, it’s a win.

They’re already partnering with Stanford’s SCALE Initiative and launching education-focused research through their NextGenAI program. In OpenAI’s words:

“As we run longer-term studies on how students learn best with AI, we intend to publish a deeper analysis of what we’ve learned about the links between model design and cognition, shape future product experiences based on these insights, and work side by side with the broader education ecosystem to ensure AI benefits learners worldwide.”

It sounds helpful. But is there any scenario where OpenAI decides their approach doesn’t work? Will they reverse their position if the data suggests otherwise?

Unlikely. The incentives are entirely misaligned. Like studies about cancer sponsored by tobacco companies, any research about student learning that OpenAI helps fund, direct, or analyze can’t be taken at face value.

I'm skeptical that students will even know Study Mode exists, let alone adopt it as their default. Releasing the feature in late July, just before schools reopen, feels less like a genuine educational intervention and more like a preemptive PR move.

But let’s say it does work. Let’s say students start learning better, faster, and deeper through a chatbot trained on pedagogical best practices. Then what?

If a model can explain material more clearly than a teacher…

If it can personalize instruction more effectively than a curriculum…

If it becomes the first place students go when they need help…

Then what exactly is the role of a school? A teacher? A class?

There may be enormous potential in AI-powered learning, especially for students without access to quality instruction or support. But that potential can’t be realized or trusted if the majority of educators are constantly playing catch-up to features they didn’t ask for, launched without their knowledge, and implemented without discussion.

Study Mode represents a bold move by the industry’s power player to draw students further into its vision of a new educational future, one in which AI becomes the focal point of the learning experience. Like an anaconda slithering forward and circling its victim, AI companies are preying on an outdated and vulnerable educational system, whether we like it or not.

This is a really balanced, thoughtful (and helpful) analysis, Stephen. Thank you.

I think your point about it putting the onus on the students to self-regulate is crucial in all of this. You suggest it is unlikely and that absolutely chimes with my classroom experience.

I think the Socratic approach has merit and it's in line with the way many teachers are trying to use AI to enhance learning rather than to shortcut to a final product - but the subtext here, as you rightly point out, moves towards teacher replacement.

Fundamentally, I think humans are motivated to learn by other humans. When I'm feeling optimistic, I think that impulse will mitigate ChatGPT's educational land grab. In darker moods, I fear we will lose sight of it and be swept away!

Steve, this is terrific. I consider myself someone who tries to stay fairly up to date with AI, and I didn’t even realize this new mode was being released! I think one of your most important points is your recognition of how difficult or impossible it’s going to be for students not to toggle out of tutor mode and get the answer key. We see how much difficulty students have regulating their use of social media, and it’s crazy to expect that they will be any more capable of regulating how they use AI. It’s not even August and I already feel exhausted. lol